Why Data Centers Are Measured in MW/GW

Editor’s Note: Welcome to TECH, UNPACKED - our new explainer series built for anyone who wants to follow Asia’s tech story without getting lost in jargon.

We cover a lot of ground at Asia Tech Lens: AI, fintech, semiconductors, data infrastructure, and everything in between. But we’ve realized that many readers want a quick, clear way to understand the fundamentals before diving into the deeper analysis. That’s what this series is for.

Each edition takes one core idea and breaks it down the way a colleague would - straightforward, practical, and free of unnecessary complexity.

If ATL is about helping people make sense of Asia’s tech landscape, TECH, UNPACKED is the on-ramp.

In Plain English

Ever been confused when someone describes a data center as “a 50-MW site” or “a 1-GW campus”? It sounds like a power plant…and that’s no coincidence!

A data center is defined by how much electricity it can handle safely and continuously, not by how big the building is. In simple terms, the headline number is like saying, “this place has enough power to run X thousand fridges and air-conditioners 24/7 without blowing a fuse or overheating.”

Common Misunderstanding

Many people assume MW/GW describe how much computation a data center can produce. They don’t. They measure how much electrical power the facility can draw and distribute at peak load.

Why It Exists

Traditional building metrics such as floor area stopped being useful once data centers evolved into industrial-scale power consumers. The real constraint isn’t space; it’s how much electricity the site can draw and distribute safely, and how much of the resulting heat it can remove. As computing hardware grew more power-dense, the industry needed a single metric that captured true operating capacity. MW/GW emerged because only power, not square footage, reveals what a site can support.

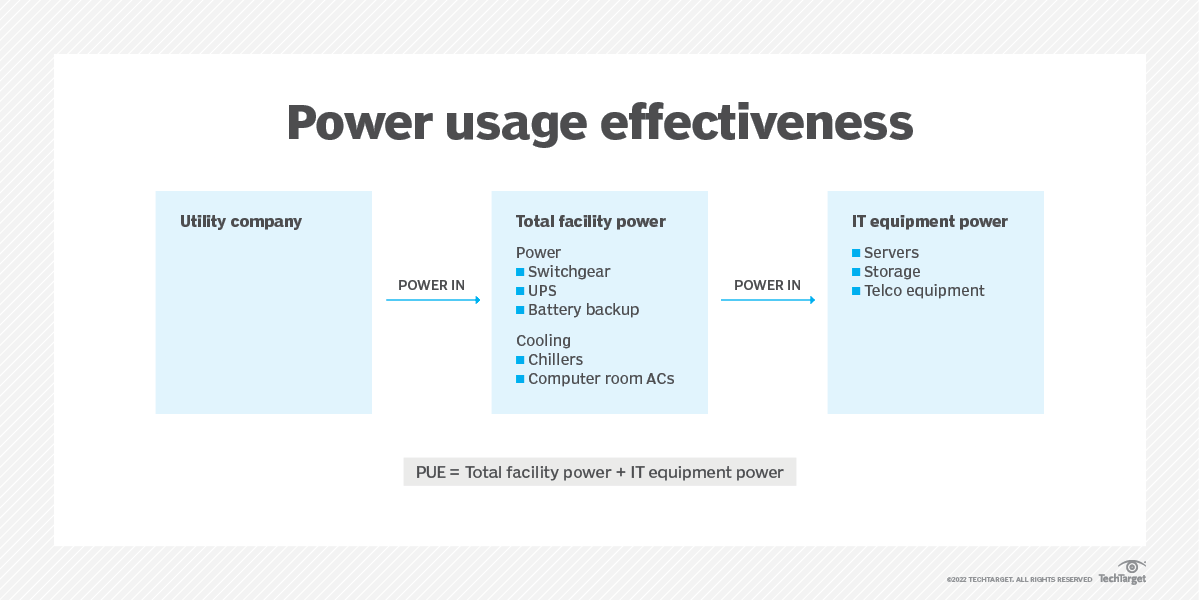

This is also why another metric, PUE (Power Usage Effectiveness), shows up so often. It tells you how efficiently a data center uses its power: total facility power divided by the power that reaches the IT equipment. A PUE of 1.2, for example, means that for every 1.2 units of power drawn, 1 unit reaches the servers and 0.2 goes to overhead such as cooling and power delivery.
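To make the PUE arithmetic concrete, here is a minimal Python sketch. The function names are hypothetical, and the 50-MW example assumes the quoted figure is total facility draw; operators do not always say whether a headline number means total draw or IT load, so treat that as an assumption.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility power spent on overhead (cooling, power delivery, etc.)."""
    return 1 - 1 / pue_value


def it_power_available_mw(total_facility_mw: float, pue_value: float) -> float:
    """Power left for the servers when the whole site draws total_facility_mw at a given PUE."""
    return total_facility_mw / pue_value


# The example from the text: 1.2 units drawn, 1 unit reaches the servers.
print(pue(1.2, 1.0))

# Share of the total draw lost to overhead at a PUE of 1.2.
print(round(overhead_share(1.2), 3))

# Hypothetical 50-MW site, assuming 50 MW is the total facility draw.
print(round(it_power_available_mw(50, 1.2), 1))
```

Note that the 0.2 units of overhead are about 16.7% of the total draw (0.2 / 1.2), even though they equal 20% of the IT load. At a PUE of 1.2, a 50-MW site would leave roughly 41.7 MW for the servers themselves.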

Why It Matters Now

It matters now because AI has completely changed the power equation (pun totally intended). The hardware behind modern AI systems like ChatGPT and DeepSeek draws huge amounts of electricity, and it starts at the rack level. In plain terms, a rack is a tall metal cabinet, about the size of a refrigerator, filled with servers. When those servers are equipped with AI chips (GPUs), that single cabinet can consume as much power as a small office floor, and cooling it only adds to the load.
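A back-of-the-envelope sketch shows how rack-level power adds up to site-level megawatts. Every figure here (40 kW per GPU-equipped rack, 8 kW per conventional rack, 500 racks, a PUE of 1.2) is an illustrative assumption, not a measurement from any real facility; actual rack densities vary widely.

```python
# Hypothetical figures for illustration only; real rack densities vary widely.
AI_RACK_KW = 40.0           # assumed IT power per GPU-equipped rack, in kilowatts
CONVENTIONAL_RACK_KW = 8.0  # assumed IT power per conventional server rack, in kilowatts
RACK_COUNT = 500            # assumed number of racks on the site
ASSUMED_PUE = 1.2           # assumed PUE, covering cooling and power-delivery overhead


def site_power_mw(rack_kw: float, racks: int, pue: float) -> float:
    """Total facility power in MW: IT load (racks x kW per rack) times PUE, converted kW -> MW."""
    it_load_kw = rack_kw * racks
    return it_load_kw * pue / 1000.0


ai_site = site_power_mw(AI_RACK_KW, RACK_COUNT, ASSUMED_PUE)
conventional_site = site_power_mw(CONVENTIONAL_RACK_KW, RACK_COUNT, ASSUMED_PUE)
print(f"AI racks: {ai_site:.1f} MW, conventional racks: {conventional_site:.1f} MW")
```

Under these assumptions, the GPU build-out needs about 24 MW versus about 4.8 MW for the same number of conventional racks, which is why the conversation moves so quickly from kilowatts to megawatts.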

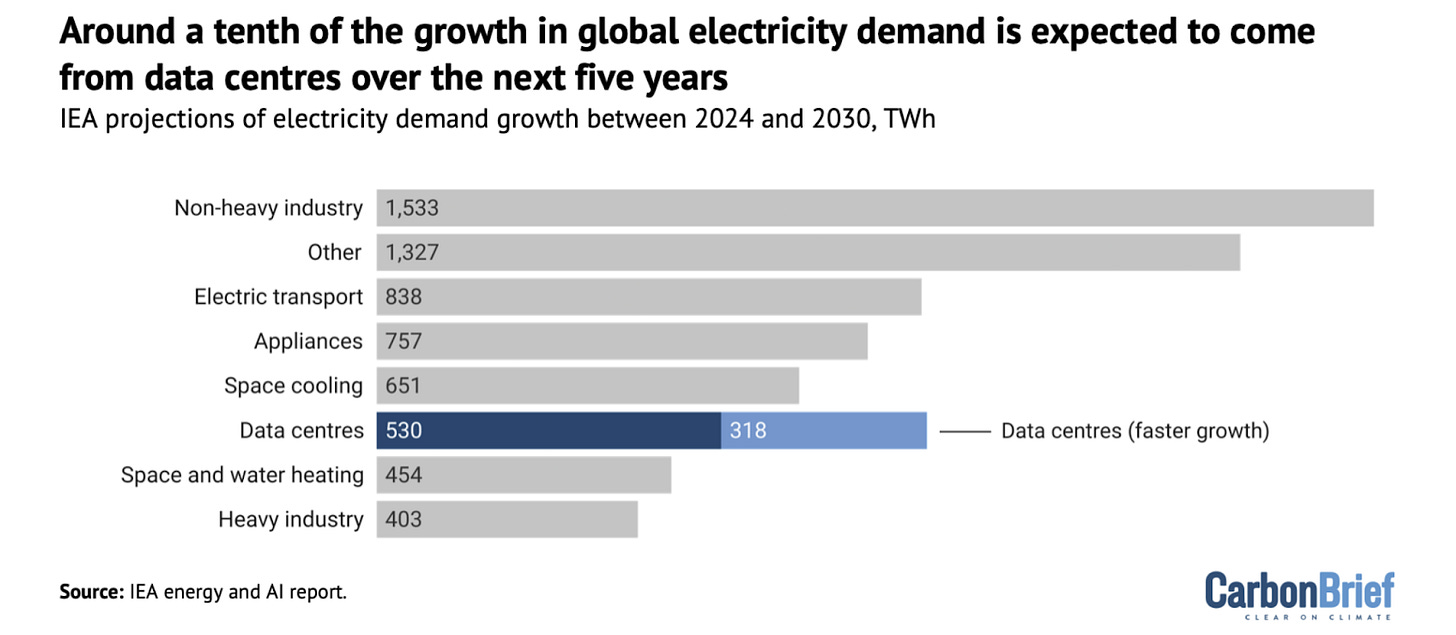

Multiply that across hundreds of racks, and the numbers get extreme very quickly. According to the International Energy Agency (IEA), data centers account for about 1.5% of the world’s total yearly electricity consumption, or roughly 415 terawatt-hours (TWh).
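MW and GW measure power, the rate at which electricity is drawn at a given moment, while TWh measure energy, that power accumulated over time. A minimal sketch of the conversion between the two, assuming a load that runs continuously through an 8,760-hour year (function names are hypothetical):

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours, ignoring leap years


def annual_energy_twh(average_power_mw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used over a year by a load averaging average_power_mw, in terawatt-hours."""
    mwh = average_power_mw * hours
    return mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


def average_power_gw(annual_twh: float, hours: float = HOURS_PER_YEAR) -> float:
    """Average continuous power, in gigawatts, implied by an annual energy total in TWh."""
    gwh = annual_twh * 1000  # 1 TWh = 1,000 GWh
    return gwh / hours


# A hypothetical 1-GW (1,000-MW) campus running at full power all year.
print(annual_energy_twh(1000))

# The IEA's ~415 TWh figure expressed as an average global draw.
print(round(average_power_gw(415), 1))
```

A 1-GW campus running flat out all year would use about 8.76 TWh, an upper bound since real sites rarely run at full capacity around the clock. Working backwards, roughly 415 TWh a year corresponds to an average draw of about 47 GW worldwide.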

That shift is why power has become the bottleneck. Governments are rethinking where new data centers can go, cloud providers are building near abundant energy sources, and regions with spare power capacity, from Johor in Malaysia to Visakhapatnam in India, are emerging as AI infrastructure hotspots.

The fact of the matter is: you can’t scale AI unless you can power it.

And that’s why data centers speak in megawatts, not square meters.